3 Data analysis

A total of 8007 results (papers) from 2009-2018 were obtained. The proportion of animal and human movement papers among all scientific papers extracted from Web of Science (WoS) was higher in the later years of the period, rising from about \(4 \times 10^{-4}\) in 2009-2010 to about \(6 \times 10^{-4}\) in 2014 and 2018 (see the table and figures below; the code to reproduce them can be downloaded here).

| Year | Movement articles | All articles | Proportion movement/all (\(\times 10^{-4}\)) |
|---|---|---|---|
| 2009 | 485 | 1139611 | 4.25 |
| 2010 | 479 | 1186928 | 4.03 |
| 2011 | 564 | 1262956 | 4.47 |
| 2012 | 666 | 1323677 | 5.03 |
| 2013 | 791 | 1398009 | 5.66 |
| 2014 | 878 | 1438134 | 6.11 |
| 2015 | 978 | 1709898 | 5.72 |
| 2016 | 937 | 1775745 | 5.28 |
| 2017 | 1073 | 1838351 | 5.84 |
| 2018 | 1156 | 1928507 | 5.99 |
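As a quick check of the arithmetic behind the table, the Python sketch below recomputes the total and the per-year proportions from the counts. It is only an illustration (the original analysis was done in R, and the variable names are ours); proportions are expressed in units of \(10^{-4}\), as in the last column.

```python
# Counts transcribed from the table above: movement-ecology papers and all WoS papers per year.
movement = {2009: 485, 2010: 479, 2011: 564, 2012: 666, 2013: 791,
            2014: 878, 2015: 978, 2016: 937, 2017: 1073, 2018: 1156}
all_articles = {2009: 1139611, 2010: 1186928, 2011: 1262956, 2012: 1323677,
                2013: 1398009, 2014: 1438134, 2015: 1709898, 2016: 1775745,
                2017: 1838351, 2018: 1928507}


def proportion_per_1e4(n_movement: int, n_all: int) -> float:
    """Share of movement papers among all papers, in units of 10^-4."""
    return n_movement / n_all * 1e4


# Total number of movement papers over the decade (the text reports 8007).
total = sum(movement.values())
# Per-year proportions rounded to two decimals, as in the table.
proportions = {year: round(proportion_per_1e4(movement[year], all_articles[year]), 2)
               for year in movement}

if __name__ == "__main__":
    print("total:", total)
    for year, value in proportions.items():
        print(year, value)
```

Recomputed from the counts, 2009 and 2010 come out as 4.26 and 4.04 (the table shows 4.25 and 4.03), presumably a rounding difference; the other years agree with the table.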

Several dimensions of the movement ecology literature were analyzed: research topics, taxonomical groups studied, components of the movement ecology framework studied, tracking devices used, software tools used, and statistical methods applied. Depending on the dimension, we analyzed the title, keywords, abstract and/or materials and methods (M&M) section. The sections used for each dimension are detailed in the following table.

| Dimension | Title | Keywords | Abstract | M&M |
|---|---|---|---|---|
| Topics | | | X | |
| Taxonomy | X | X | X | |
| Framework | X | X | X | |
| Devices | X | X | X | X |
| Software | X | X | X | X |
| Methods | X | X | X | X |

Table 3.2. Paper sections used to analyze each dimension.

3.1 Topic analysis

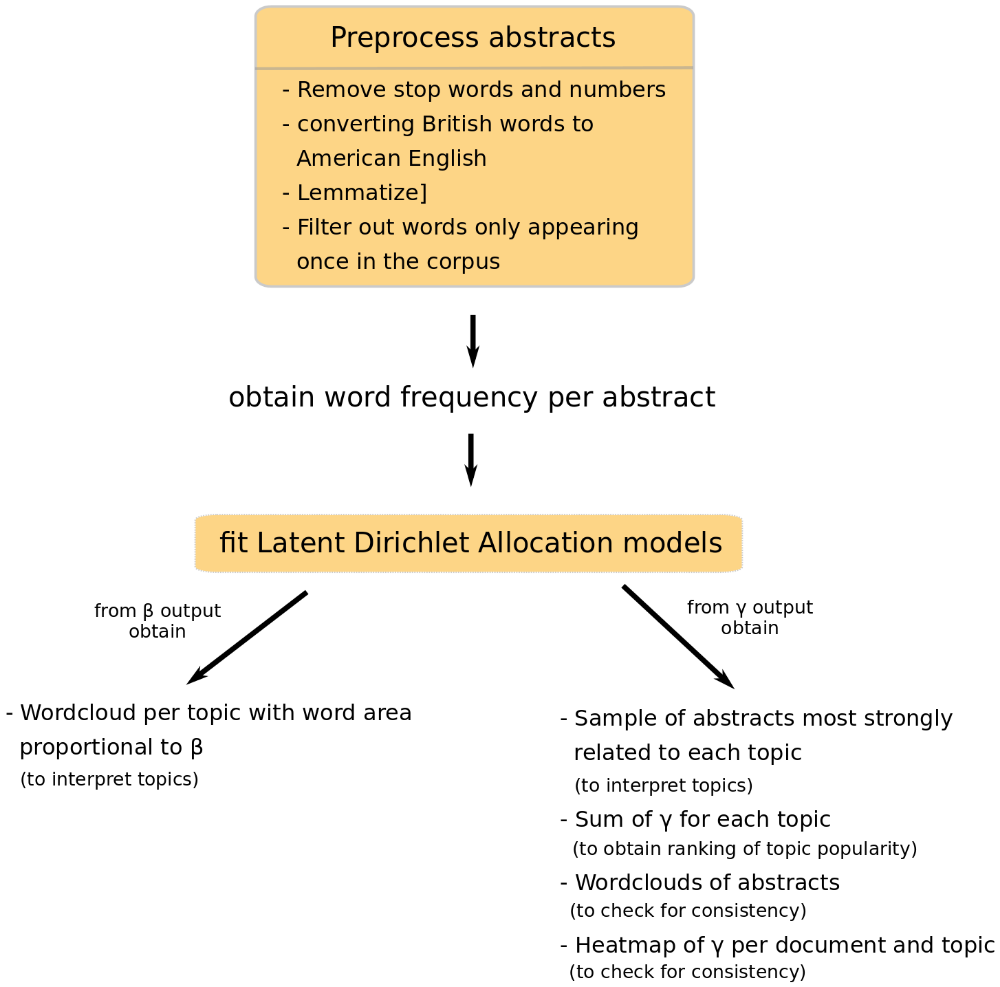

Fig. 3.2. Stages of topic analysis.

The topics were not defined a priori. Instead, we fitted Latent Dirichlet Allocation (LDA) models to the abstracts (Blei, Ng, and Jordan (2003)).

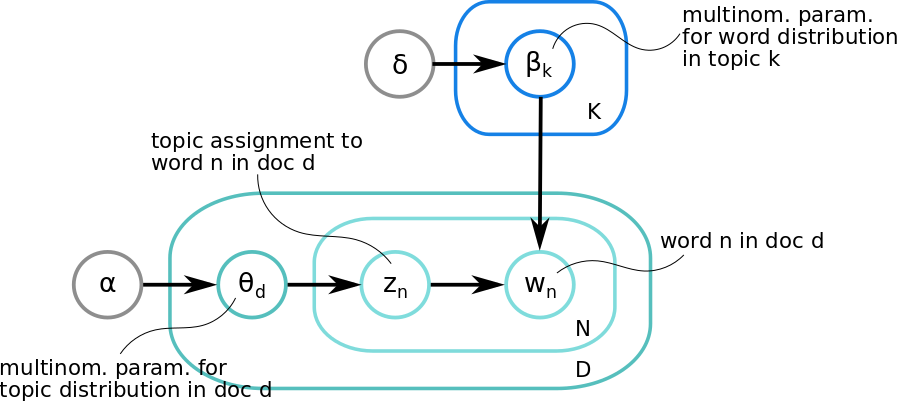

3.1.1 The model

LDA models are Bayesian mixture models that assume a fixed number \(K\) of topics underlying the abstracts. Each topic \(k\) is characterized by a multinomial distribution over words with parameter \(\beta_k\), drawn from a Dirichlet distribution with parameter \(\delta\). Each document \(d \in \{1, \dots, D\}\) is composed of a mixture of topics: its topic proportions \(\theta_d\) are drawn from a Dirichlet distribution with parameter \(\alpha\). For each word \(w\) in document \(d\), a hidden topic \(z\) is first drawn from the multinomial distribution with parameter \(\theta_d\); the word is then drawn from the multinomial distribution of the selected topic \(z\), with parameter \(\beta_z\). The log-likelihood of a document \(d = \{w_1, \dots, w_N\}\) is

\[
\ell(\alpha, \beta) = \log p(d \mid \alpha, \beta) = \log \int \sum_{z} \left[ \prod_{i=1}^{N} p(w_i \mid z_i, \beta)\, p(z_i \mid \theta) \right] p(\theta \mid \alpha)\, d\theta .
\]
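To make the generative process concrete, here is a minimal Python sketch that simulates a small corpus from it. It is not the estimation code (the analysis used the R package topicmodels); the function names, the symmetric priors and the parameter name `delta` for the Dirichlet parameter of the per-topic word distributions are our own illustrative choices. Dirichlet draws are obtained by normalizing independent Gamma variates, a standard construction.

```python
import random


def sample_dirichlet(alpha, rng):
    """One draw from Dirichlet(alpha), built by normalizing independent Gamma(alpha_i, 1) draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [x / total for x in draws]


def generate_corpus(n_docs, doc_len, k, vocab_size, alpha, delta, seed=0):
    """Simulate documents (lists of word ids) from the LDA generative process.

    alpha: symmetric Dirichlet parameter for each document's topic proportions theta_d
    delta: symmetric Dirichlet parameter for each topic's word distribution beta_k
    Returns (beta, thetas, docs, topic_assignments).
    """
    rng = random.Random(seed)
    # 1. One word distribution per topic.
    beta = [sample_dirichlet([delta] * vocab_size, rng) for _ in range(k)]
    thetas, docs, assignments = [], [], []
    for _ in range(n_docs):
        # 2. Topic proportions of this document.
        theta = sample_dirichlet([alpha] * k, rng)
        # 3a. A hidden topic z for every word position, drawn from Multinomial(theta).
        z = rng.choices(range(k), weights=theta, k=doc_len)
        # 3b. Each word drawn from the word distribution of its topic.
        words = [rng.choices(range(vocab_size), weights=beta[t])[0] for t in z]
        thetas.append(theta)
        docs.append(words)
        assignments.append(z)
    return beta, thetas, docs, assignments


if __name__ == "__main__":
    beta, thetas, docs, z = generate_corpus(n_docs=3, doc_len=10, k=2,
                                            vocab_size=20, alpha=0.5, delta=0.1)
    for theta, doc in zip(thetas, docs):
        print([round(p, 2) for p in theta], doc)
```

Fitting LDA inverts this process: given only the words, it estimates \(\beta\) and the per-document topic proportions.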

Here we used the LDA model with variational expectation-maximization (VEM) estimation (Wainwright and Jordan (2008), Blei, Ng, and Jordan (2003)), as implemented in the R package topicmodels. Full details of the model specification and estimation are given in Grün and Hornik (2011).

The model assumes exchangeability of words (i.e. word order is ignored, the "bag-of-words" assumption), that topics are uncorrelated, and that the number of topics is known in advance.

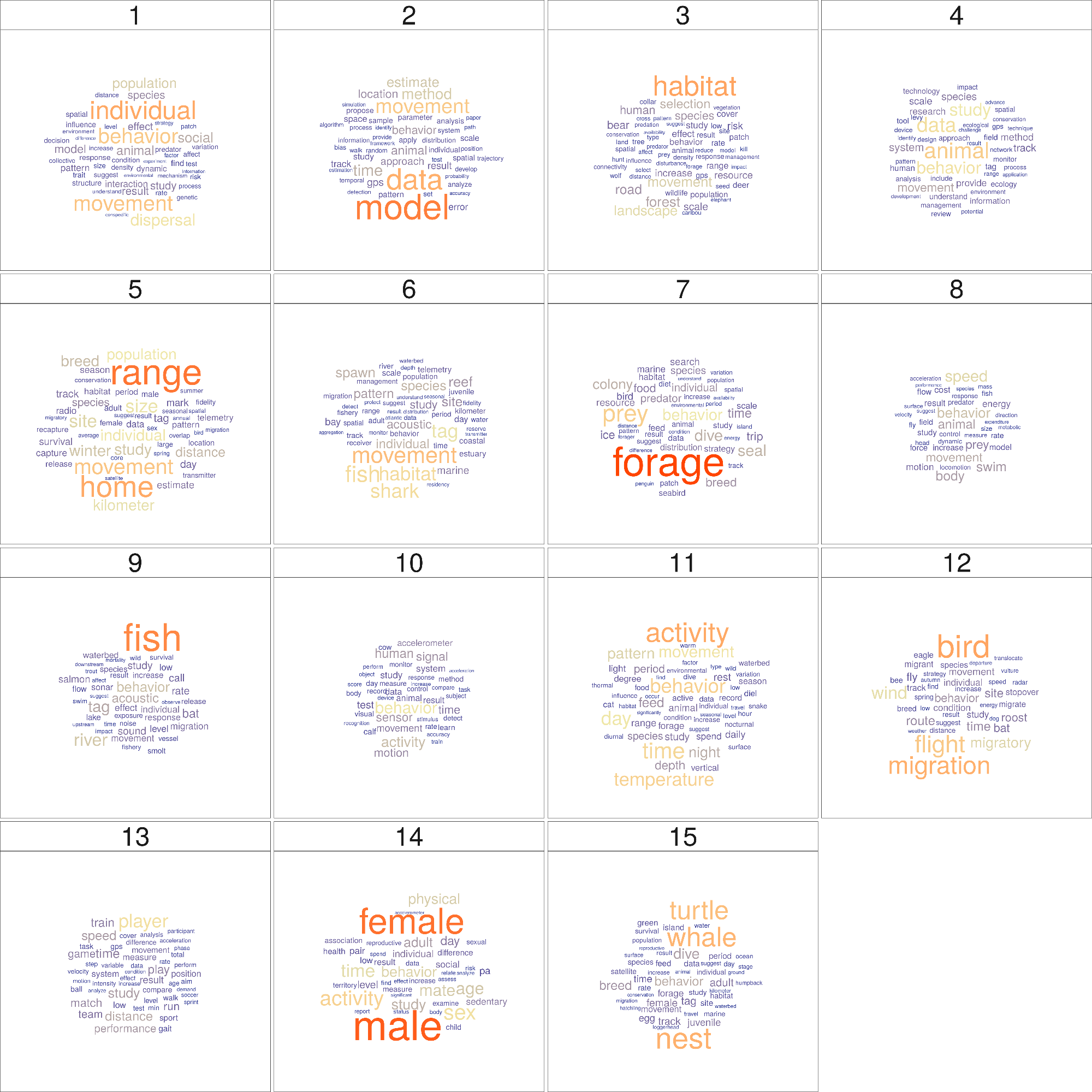

The most commonly used criterion for choosing the number of topics is the perplexity score, or likelihood, of a held-out test dataset (De Waal and Barnard (2008)). This quantity measures how uncertain a language model is when predicting new text (in this study, the abstract of a new paper). Lower perplexity is better: it means the model assigns higher probability to the held-out text. However, perplexity measures predictive ability rather than whether the latent topics are interpretable by humans (Chang et al. (2009)). In fact, choosing the number of topics this way can yield too many topics; for example, Griffiths and Steyvers (2004) analyzed PNAS abstracts and obtained 300 topics. Hence, we fixed the number of topics to 15, a value large enough that the topics would not be too general, yet small enough that we could still interpret all of them.
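For reference, the perplexity of a held-out set of documents is usually defined as \(\exp\left(-\sum_{d} \log p(w_d) / \sum_{d} N_d\right)\), where \(N_d\) is the number of words in document \(d\). The Python sketch below computes it from per-document log-likelihoods, which would have to come from a fitted model (for LDA these are themselves approximations, e.g. a variational bound); the function name is ours. A perplexity of \(P\) can be read as the model being as uncertain as if it were choosing uniformly among \(P\) words, which the example checks.

```python
import math


def perplexity(doc_log_likelihoods, doc_lengths):
    """Perplexity of held-out documents: exp(-sum_d log p(w_d) / sum_d N_d).

    doc_log_likelihoods: natural-log likelihood log p(w_d) of each held-out document
    doc_lengths: number of word tokens N_d in each document
    """
    total_words = sum(doc_lengths)
    if total_words == 0:
        raise ValueError("held-out set contains no words")
    return math.exp(-sum(doc_log_likelihoods) / total_words)


if __name__ == "__main__":
    v = 1000
    lengths = [20, 5]
    # A model that spreads probability uniformly over v words.
    logliks = [n * math.log(1 / v) for n in lengths]
    print(perplexity(logliks, lengths))  # approximately 1000
```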

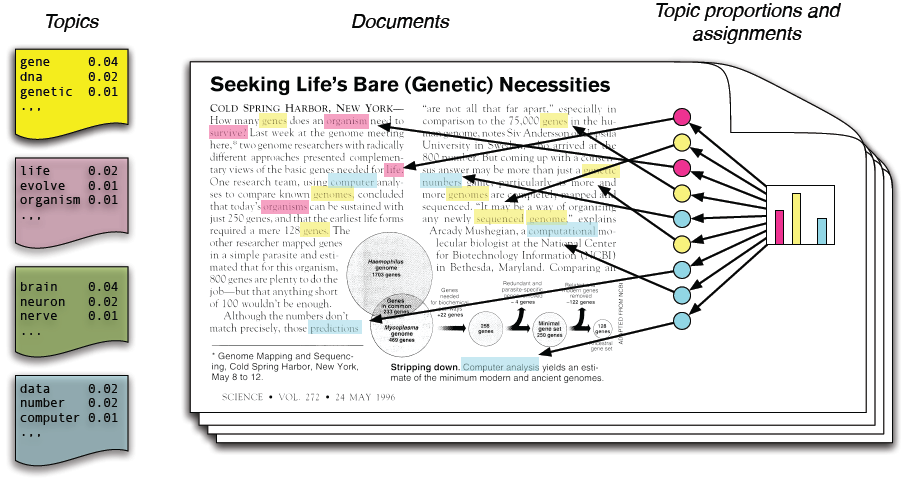

Fig. 3.3. Schematic representation of the links between words, documents and topics. Each document is a mixture of topics. Each topic is modeled as a distribution of words. Each word comes out of one of these topics. Source of the image: Blei, D.M. 2012. Probabilistic topic models. Communications of the ACM, 55(4), 77-84.

Fig. 3.4. Schematic representation of the Latent Dirichlet Allocation model described above.

3.1.2 Preprocessing

To improve the quality of our LDA model outputs, we cleaned the data by 1) removing words that are uninformative for identifying topics (e.g. prepositions and numbers), 2) converting all British English spellings to American English so that variants such as “behaviour” and “behavior” would not be treated as different words, 3) lemmatizing, i.e. reducing each word to its lemma (its dictionary form, given its intended meaning) so that inflected forms are grouped under the same lemma (Ingason et al. (2008)), and 4) filtering out words that were used only once in the whole set of abstracts. The R packages tidytext (Silge and Robinson (2016)), tm and textstem (Rinker (2018)) were used at this stage (click to download).
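The four cleaning steps can be sketched in Python as follows. This is only an illustration under strong simplifying assumptions: the stop-word list, the British-to-American spelling map and the lemma table are tiny hypothetical lookups, whereas the actual analysis relied on the full resources of the R packages named above.

```python
import re
from collections import Counter

# Hypothetical, tiny lookup tables for illustration only.
STOP_WORDS = {"the", "of", "in", "and", "a", "to", "we", "were", "for", "with", "by", "from"}
BRITISH_TO_AMERICAN = {"behaviour": "behavior", "behaviours": "behaviors", "modelling": "modeling"}
LEMMAS = {"behaviors": "behavior", "animals": "animal", "tracked": "track",
          "tracking": "track", "migrating": "migrate", "migrates": "migrate"}


def tokenize(text):
    """Lowercase and split on anything that is not a letter (drops numbers and punctuation)."""
    return [t for t in re.split(r"[^a-z]+", text.lower()) if t]


def clean_corpus(abstracts):
    docs = []
    for text in abstracts:
        tokens = [t for t in tokenize(text) if t not in STOP_WORDS]  # 1) uninformative words
        tokens = [BRITISH_TO_AMERICAN.get(t, t) for t in tokens]     # 2) British -> American
        tokens = [LEMMAS.get(t, t) for t in tokens]                  # 3) lemmatize via lookup
        docs.append(tokens)
    # 4) Drop words that occur only once in the whole set of abstracts.
    counts = Counter(t for doc in docs for t in doc)
    return [[t for t in doc if counts[t] > 1] for doc in docs]


if __name__ == "__main__":
    print(clean_corpus(["We tracked 25 animals and their behaviour in 2015.",
                        "Tracking animal behavior with GPS collars."]))
```

Spelling normalization is applied before lemmatization so that, for example, “behaviours” first becomes “behaviors” and then shares the lemma “behavior”.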

3.1.3 Model fitting

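We obtained the parameter estimates of the LDA model by fitting it 20 times with the VEM estimation method, each replicate starting from a different random initialization, and keeping the replicate with the highest likelihood, since VEM can converge to a local rather than a global optimum; click here to download the code.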
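Because variational EM only guarantees a local optimum that depends on the starting values, keeping the best of several replicates is a simple safeguard. The Python sketch below shows the selection logic; the bimodal toy objective `toy_log_likelihood` and the gradient-ascent `toy_fit` are hypothetical stand-ins for one VEM run of the LDA model, chosen so that some random starts end in the lower of two local optima.

```python
import random


def toy_log_likelihood(x):
    """Hypothetical bimodal objective standing in for the LDA variational bound."""
    return -(x * x - 1.0) ** 2 + 0.3 * x


def toy_fit(seed, steps=2000, lr=0.01):
    """One hypothetical replicate: random start, then local gradient ascent."""
    rng = random.Random(seed)
    x = rng.uniform(-2.0, 2.0)
    for _ in range(steps):
        grad = -4.0 * x * (x * x - 1.0) + 0.3  # derivative of toy_log_likelihood
        x += lr * grad
    return toy_log_likelihood(x), x


def best_of_replicates(fit, seeds):
    """Run fit(seed) for every seed; keep the replicate with the highest log-likelihood."""
    best = None
    for seed in seeds:
        loglik, params = fit(seed)
        if best is None or loglik > best[0]:
            best = (loglik, params, seed)
    return best


# 20 replicates, as in the analysis described above.
best_loglik, best_x, best_seed = best_of_replicates(toy_fit, range(20))

if __name__ == "__main__":
    print(best_seed, round(best_x, 3), round(best_loglik, 3))
```

Replacing `toy_fit` with a function that fits the topic model for a given seed and returns its log-likelihood gives the procedure described above.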

3.1.4 Model outputs

From the fitted LDA model, we obtained:

- \(E(\beta \mid z, w)\), the posterior expected values of the word distribution of each topic, denoted by \(\hat{\beta}\); and
- \(E(\theta_d \mid z)\), the posterior topic distribution of each abstract, denoted by \(\gamma\) in the package.

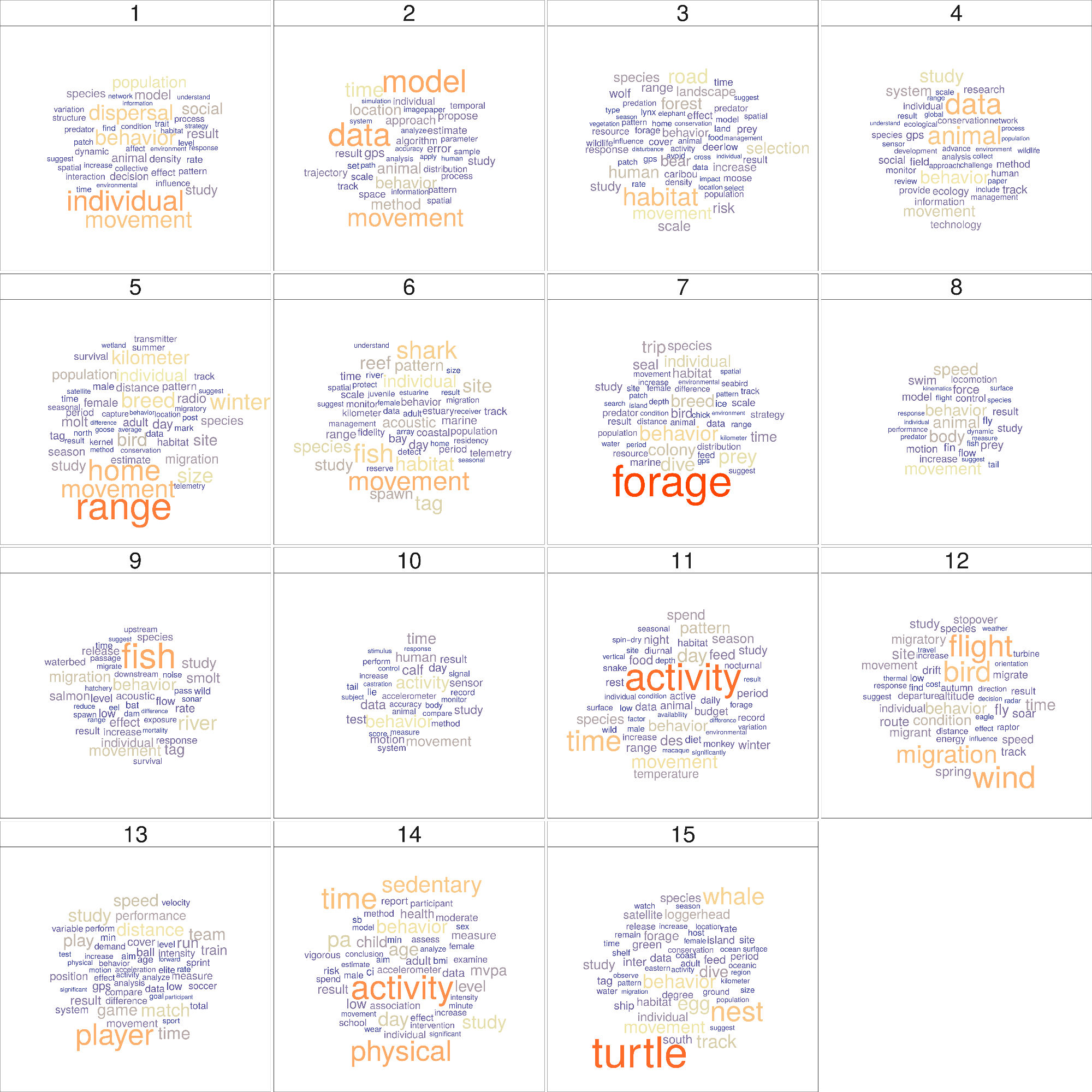

The \(\hat{\beta}\) values were thus a proxy for the importance of a word in a topic. They were used to interpret and label each topic, and to create a wordcloud for each topic, where the area occupied by each word was proportional to its \(\hat{\beta}\) value.
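Selecting the words with the largest \(\hat{\beta}\) values for each topic (used both for labelling topics and, later, for the word intrusion test) amounts to a per-topic sort. A minimal Python sketch, assuming \(\hat{\beta}\) is available as one probability row per topic aligned with a vocabulary list (the function name and example words are hypothetical):

```python
def top_terms(beta_hat, vocab, n=4):
    """Return the n highest-probability words for each topic.

    beta_hat: list of K rows, beta_hat[k][v] = estimated P(word v | topic k)
    vocab: list of V words aligned with the columns of beta_hat
    """
    result = []
    for topic_row in beta_hat:
        ranked = sorted(range(len(vocab)), key=lambda v: topic_row[v], reverse=True)
        result.append([vocab[v] for v in ranked[:n]])
    return result


if __name__ == "__main__":
    vocab = ["migration", "bird", "route", "stopover", "speed", "tag"]
    beta_hat = [[0.40, 0.30, 0.15, 0.10, 0.03, 0.02],
                [0.05, 0.05, 0.10, 0.05, 0.45, 0.30]]
    print(top_terms(beta_hat, vocab, n=2))
```

For a wordcloud, the same \(\hat{\beta}\) values would simply be passed on as word weights.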

Since \(\gamma\) indicated the degree of association between an abstract and a topic, we extracted a sample (click to download) of the 5 abstracts most strongly associated with each topic, to aid the interpretation of the topics.

Based on these outputs, the topics were interpreted as: 1) Dispersal, 2) Movement models, 3) Habitat selection, 4) Detection and data, 5) Home ranges, 6) Aquatic systems, 7) Foraging in marine megafauna, 8) Biomechanics, 9) Acoustic telemetry, 10) Experimental designs, 11) Activity budgets, 12) Migration, 13) Sports, 14) Human activity patterns, 15) Breeding ecology. For an extended description of these topics, see the main text of the manuscript. The sum of \(\gamma\) values for each topic (\(\sum_d E(\theta_d | z_k)\) for each \(k\)) served as proxies of the “prevalence” of the topic relative to all other topics and were used to rank them.
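Both quantities derived from \(\gamma\), the most strongly associated abstracts for each topic and the prevalence ranking based on \(\sum_d E(\theta_d \mid z_k)\), reduce to simple operations on the document-by-topic matrix. A Python sketch, assuming \(\gamma\) is stored as one row per abstract (function names are hypothetical):

```python
def topic_prevalence(gamma):
    """Sum of gamma over documents for each topic, i.e. sum_d E(theta_d | z_k)."""
    n_topics = len(gamma[0])
    return [sum(row[k] for row in gamma) for k in range(n_topics)]


def rank_topics(gamma):
    """Topic indices ordered from most to least prevalent."""
    prevalence = topic_prevalence(gamma)
    return sorted(range(len(prevalence)), key=lambda k: prevalence[k], reverse=True)


def top_documents(gamma, topic, n=5):
    """Indices of the n documents most strongly associated with `topic`."""
    return sorted(range(len(gamma)), key=lambda d: gamma[d][topic], reverse=True)[:n]


if __name__ == "__main__":
    gamma = [[0.90, 0.05, 0.05],
             [0.80, 0.10, 0.10],
             [0.10, 0.85, 0.05],
             [0.20, 0.20, 0.60]]
    print(topic_prevalence(gamma), rank_topics(gamma), top_documents(gamma, 0, n=2))
```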

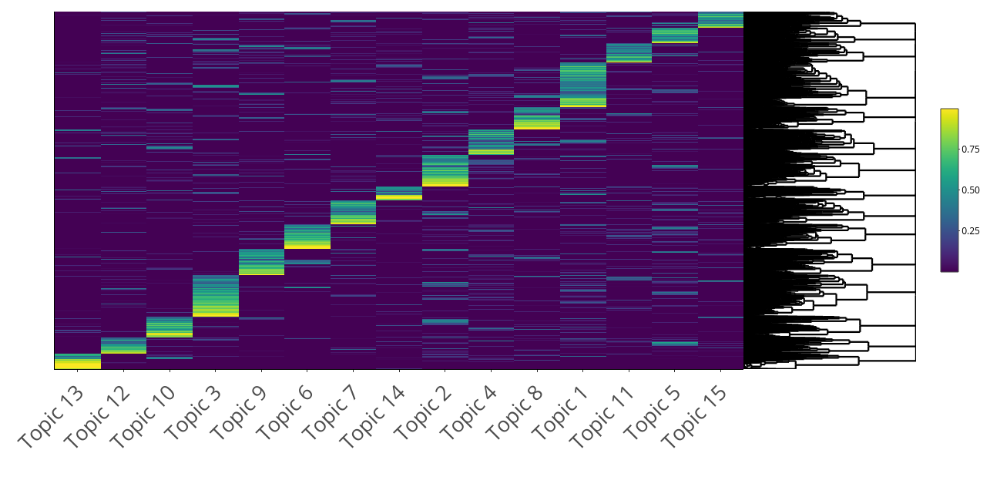

A heatmap of the \(\gamma\) values also showed that most papers were clearly associated with one topic, and few were split across several topics.

3.1.5 Model assessment

We assessed the consistency and interpretability of the LDA results via internal and external expert judgement, respectively.

3.1.5.1 Consistency

In the heatmap, we showed that most papers were more strongly associated with one topic. To check for consistency, for each topic, we compared its wordcloud with one obtained from the abstracts highly associated with that topic (\(\gamma > 0.75\)). The two wordclouds should tell a very similar story and thus look alike, with only small differences arising because these abstracts are also composed, in a small proportion, of other topics. We selected the papers with \(\gamma > 0.75\) and counted the number of times each unique word occurred in the abstracts related to the topic. We divided these counts by the total number of words in the abstracts related to the topic to obtain a relative frequency \(\eta\). We then created wordclouds for each topic, where the area occupied by each word was proportional to its \(\eta\) value. These wordclouds were overall consistent with the topic wordclouds (i.e. most words were the same), and the words that differed provided complementary information to interpret the topics and to understand which types of abstracts they were strongly associated with.
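The consistency check can be sketched in Python as follows. We assume that preprocessed abstracts are token lists aligned with the rows of \(\gamma\), and that the normalizing total is the number of word occurrences in the selected abstracts; both are our assumptions, and the function name is hypothetical.

```python
from collections import Counter


def relative_frequencies(docs, gamma, topic, threshold=0.75):
    """Relative word frequencies (eta) in abstracts strongly associated with one topic.

    docs: list of token lists (preprocessed abstracts)
    gamma: gamma[d][k] = posterior proportion of topic k in document d
    Keeps documents with gamma > threshold for `topic`, counts every word occurrence,
    and divides by the total number of word occurrences in those documents.
    """
    selected = [doc for doc, row in zip(docs, gamma) if row[topic] > threshold]
    counts = Counter(word for doc in selected for word in doc)
    total = sum(counts.values())
    return {word: c / total for word, c in counts.items()} if total else {}


if __name__ == "__main__":
    docs = [["bird", "migration", "route"], ["bird", "stopover"], ["fish", "river"]]
    gamma = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7]]
    print(relative_frequencies(docs, gamma, topic=0))
```

Comparing these \(\eta\) weights with the topic's \(\hat{\beta}\) weights (e.g. via two wordclouds) is then a visual step.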

3.1.5.2 Interpretation

To assess the interpretability of the topics, we performed a word intrusion analysis, in which people are asked to identify a word injected into the top terms of each topic. For each topic, we identified the 4 highest-probability words (click to download), i.e. those with the highest \(\hat{\beta}\) values. We did not use more words in the word intrusion test because we considered that it would have required more effort from the researchers who kindly and voluntarily participated in this process. Then, we added to each group a high-probability word from another topic (excluding “movement” and “behavior”, which were highly related to most topics); the file with the intruders can be downloaded here. We asked 10 researchers in the field to identify the intruder in each group of words without further explanation, and encouraged them to answer quickly rather than overthink; their answers are here. We then computed the number of correct answers for each topic. A high score would indicate that the topic was easy to interpret from its 4 most strongly associated words.
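The construction of an intrusion item and its scoring might look like the Python sketch below. Drawing the intruder at random from the other topics' top words is our assumption (the intruders may well have been chosen by hand), and the function names and example words are hypothetical.

```python
import random


def build_intrusion_item(top_words, topic, excluded=("movement", "behavior"), seed=0):
    """Return (shuffled word group, intruder) for one topic.

    top_words: dict mapping topic -> its highest-probability words
    The intruder is drawn at random from the top words of the other topics,
    skipping excluded words and words already in this topic's list.
    """
    rng = random.Random(seed)
    own = top_words[topic]
    candidates = [w for t, words in top_words.items() if t != topic
                  for w in words if w not in own and w not in excluded]
    intruder = rng.choice(candidates)
    group = own + [intruder]  # new list, so top_words is not modified
    rng.shuffle(group)
    return group, intruder


def intrusion_score(answers, intruder):
    """Number of participants who correctly picked the intruder."""
    return sum(1 for answer in answers if answer == intruder)


if __name__ == "__main__":
    top_words = {1: ["dispersal", "juvenile", "natal", "social"],
                 2: ["model", "random", "walk", "movement"]}
    group, intruder = build_intrusion_item(top_words, topic=1)
    print(group, intruder)
```

With 10 participants, `intrusion_score` returns a value from 0 to 10, the scale used in Table 3.3.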

The scores are shown in the table below. The code written for this analysis can be downloaded here.

| Topic number | Topic label | Score (from 0 to 10) |
|---|---|---|
| 1 | Social interactions and dispersal | 7 |
| 2 | Movement models | 10 |
| 3 | Habitat selection | 9 |
| 4 | Detection and data | 5 |
| 5 | Home ranges | 8 |
| 6 | Aquatic systems | 8 |
| 7 | Foraging in marine megafauna | 10 |
| 8 | Biomechanics | 0 |
| 9 | Acoustic telemetry | 1 |
| 10 | Experimental designs | 3 |
| 11 | Activity budgets | 2 |
| 12 | Avian migration | 9 |
| 13 | Sports | 0 |
| 14 | Human activity patterns | 5 |
| 15 | Breeding ecology | 4 |

Table 3.3. Word intrusion score for each topic.

Topics 1, 2, 3, 5, 6, 7 and 12 got high scores (\(\geq 7\)). Some of the low-scoring topics (\(\leq 5\)) had an intruder word that the researchers may have found too general, and thus confusing, such as “tag” (topic 4) and “data” (topics 9 and 15). Moreover, the two topics most associated with humans, 13 and 14, also had low scores, possibly because the researchers (all ecologists) did not expect any human-related group. Overall, this analysis shows that only about half of the topics (7 of 15) are easily interpretable using their 4 highest-probability words. Even for us, the researchers involved in this study, it was necessary to look at the abstracts most strongly associated with each topic to be sure of the interpretation of some of the topics.

An alternative way to assess topic models is to evaluate topic prediction on an independent data set, but this is appropriate when the goal of the study is to predict over new data sets, which is not the case here.

The word intrusion approach is not an exhaustive assessment of topic interpretability, but it puts our results into perspective: some topics have a clear and easy interpretation, while others are much harder to interpret.

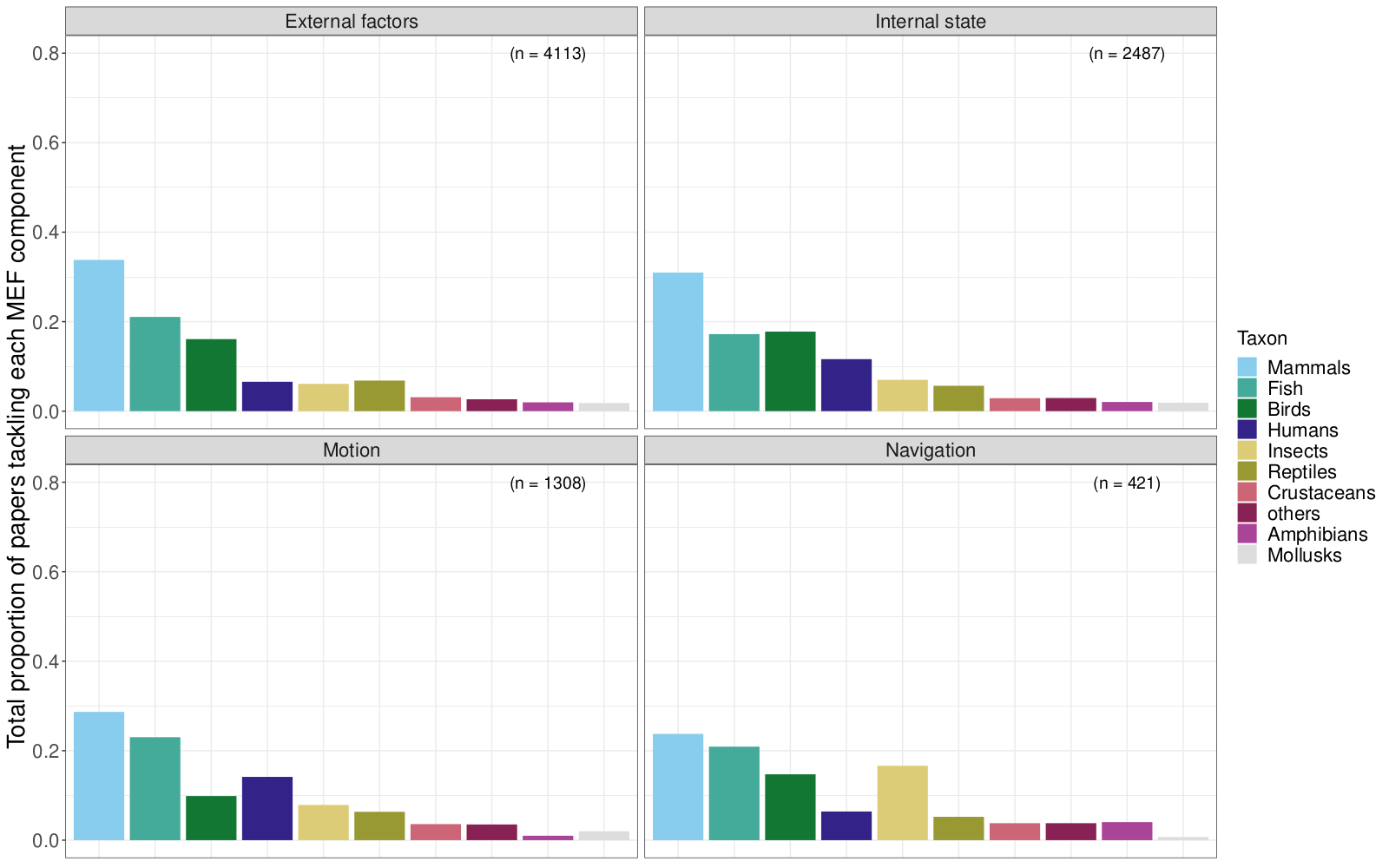

3.2 Taxonomical identification

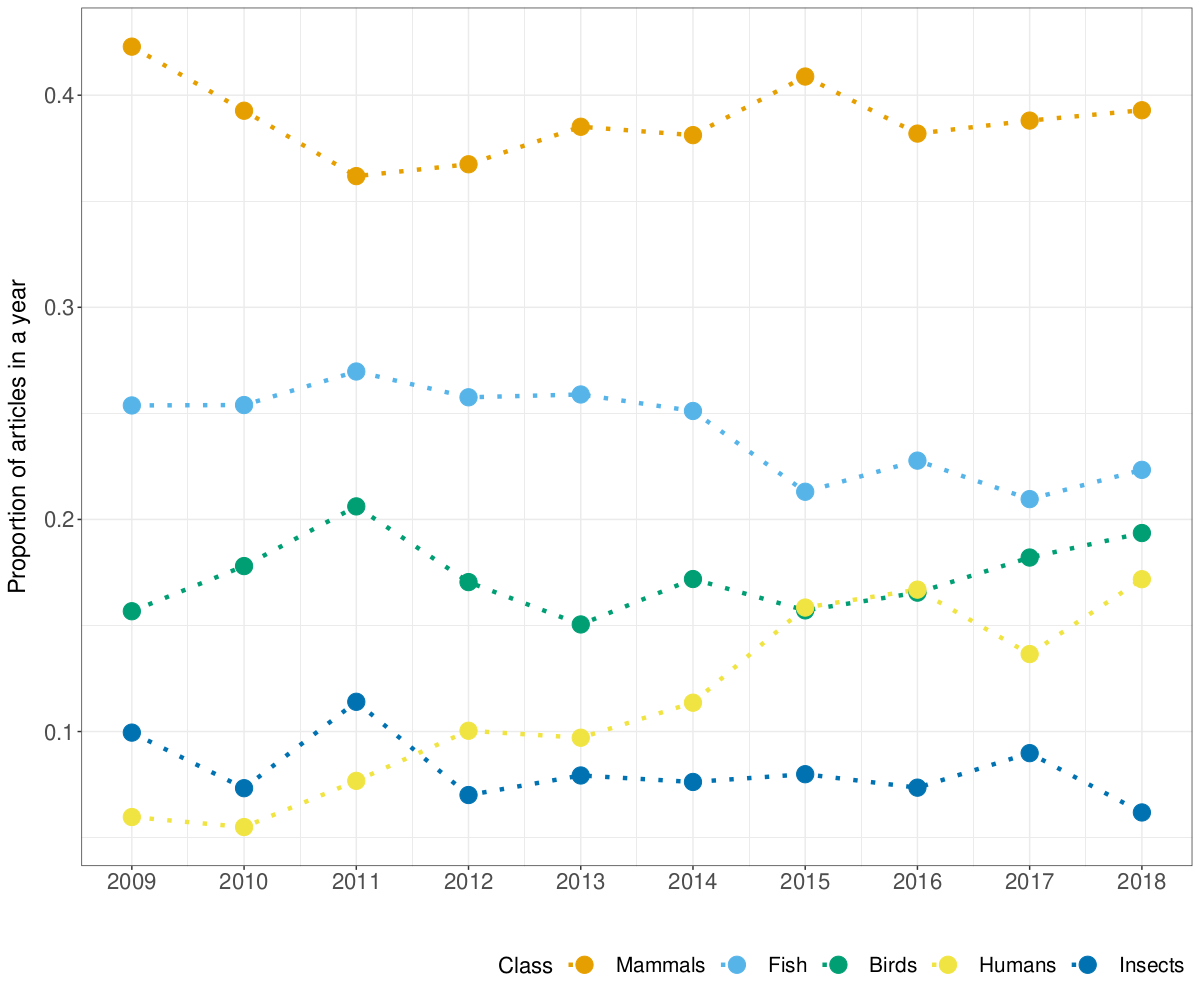

To identify the taxonomy of the organisms studied in the papers, we used the ITIS (Integrated Taxonomic Information System) database (USGS Core Science Analytics and Synthesis) to detect names of any animal species (kingdom Animalia) mentioned in the abstracts, titles and keywords. We screened these sections for Latin and common (i.e. vernacular) names of species (both singular and plural), as well as common names of higher taxonomic levels such as orders and families. We excluded ambiguous terms that are used as common names for taxa but also have an everyday meaning, for example “Here,” “Scales,” “Costa,” “Ray,” etc. Because we wanted to consider humans as a separate category, we excluded “Homo sapiens” from the search terms and instead used the following non-ambiguous terms to identify papers that focus on the movement ecology of humans:

"player", "players", "patient", "patients", "child", "children", "teenager", "teenagers", "people", "student", "students", "fishermen", "person", "tourist", "tourists", "visitor", "visitors", "hunter", "hunters", "customer", "customers", "runner", "runners", "participant", "participants", "cycler", "cyclers", "employee", "employees", "hiker", "hikers", "athlete", "athletes", "boy", "boys", "girl", "girls", "woman", "women", "man", "men", "adolescent", "adolescents".

Because some of these words also occur inside longer words, we used regular expressions to match whole words only, e.g. “man” must match only the word “man” and not “manually.” We excluded words with a potentially ambiguous meaning: “passenger” may appear in papers that mention passenger pigeons, and “driver” may be used to refer to a causal factor.

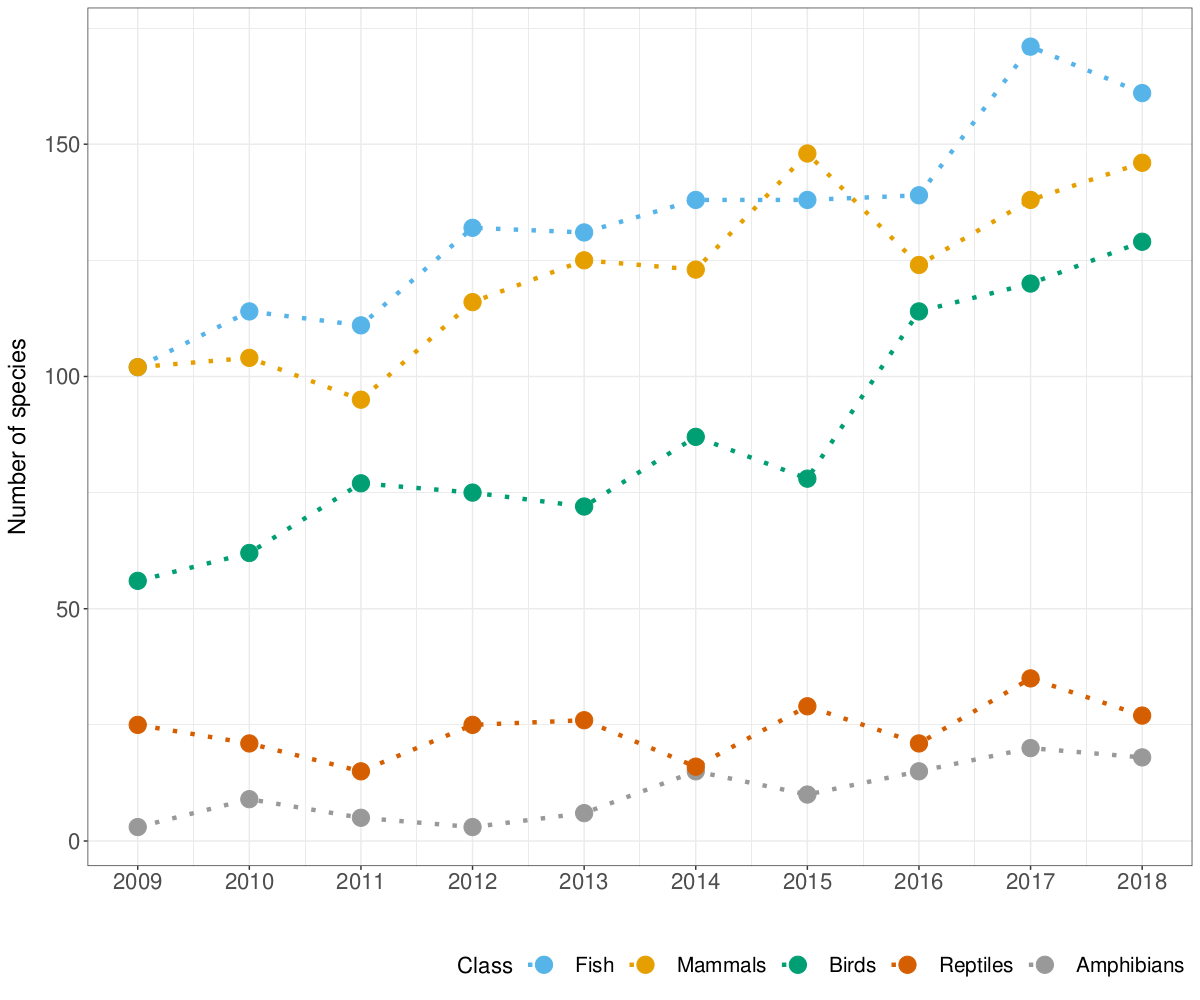
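Whole-word matching of the human-related terms can be expressed with regular-expression word boundaries (`\b`). A minimal Python sketch using a subset of the terms listed above; case-insensitive matching and the helper name are our assumptions:

```python
import re

# A subset of the human-related terms listed above.
HUMAN_TERMS = ["man", "men", "woman", "women", "player", "players", "tourist", "tourists"]

# \b on both sides means a term only matches as a whole word, so "man" does not
# match inside "manually", "managed", "woman" or "human".
HUMAN_PATTERN = re.compile(r"\b(?:" + "|".join(map(re.escape, HUMAN_TERMS)) + r")\b",
                           re.IGNORECASE)


def mentions_humans(text):
    """True if any human-related term occurs as a whole word in the text."""
    return HUMAN_PATTERN.search(text) is not None


if __name__ == "__main__":
    for text in ["Tracking men and women in a city park",
                 "Locations were manually corrected"]:
        print(text, "->", mentions_humans(text))
```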

After identifying every taxon mentioned in a paper, we summarized taxa at the Class level, except for Osteichthyes and Chondrichthyes, which we merged into a single group labeled Fish, and for the classes within the phylum Mollusca and the subphylum Crustacea, which we considered collectively. Thus, each paper was classified as focusing on one or more class-like groups, as in Holyoak et al. (2008): Fish, Mammals, Birds, Reptiles, Amphibians, Insects, Crustaceans, Mollusks, and others. For the purpose of our analysis, we kept humans as a separate category and did not count them within Class Mammalia.
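The aggregation into class-like groups can be sketched as a lookup over each taxon's higher-level lineage. In this Python sketch the lineage lists, the mapping table and the function names are hypothetical illustrations rather than the ITIS-based code used in the study; humans are added as a separate group from the term-based detection, not through Mammalia.

```python
# Hypothetical lookup from higher taxa (as found in a lineage) to the class-like groups.
GROUP_OF = {
    "Mammalia": "Mammals", "Aves": "Birds", "Reptilia": "Reptiles",
    "Amphibia": "Amphibians", "Insecta": "Insects",
    "Osteichthyes": "Fish", "Chondrichthyes": "Fish",    # merged into a single Fish group
    "Mollusca": "Mollusks", "Crustacea": "Crustaceans",  # classes within these kept together
}


def class_like_group(lineage):
    """Map a taxon's lineage (list of higher-taxon names, broadest first) to one group."""
    for taxon in lineage:
        if taxon in GROUP_OF:
            return GROUP_OF[taxon]
    return "Others"


def paper_groups(lineages, mentions_humans=False):
    """Groups studied by one paper; a paper can fall into several groups.

    Humans are detected separately (by the human-related terms), never via Mammalia.
    """
    groups = {class_like_group(lineage) for lineage in lineages}
    if mentions_humans:
        groups.add("Humans")
    return sorted(groups)


if __name__ == "__main__":
    print(paper_groups([["Animalia", "Chordata", "Aves"],
                        ["Animalia", "Arthropoda", "Crustacea", "Malacostraca"]]))
```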

The quality control procedure consisted of selecting a random sample of 100 abstracts and verifying that the common taxonomical group was correctly identified. The accuracy was 93%. The code for taxonomical identification can be downloaded here.

3.2.1 Outputs

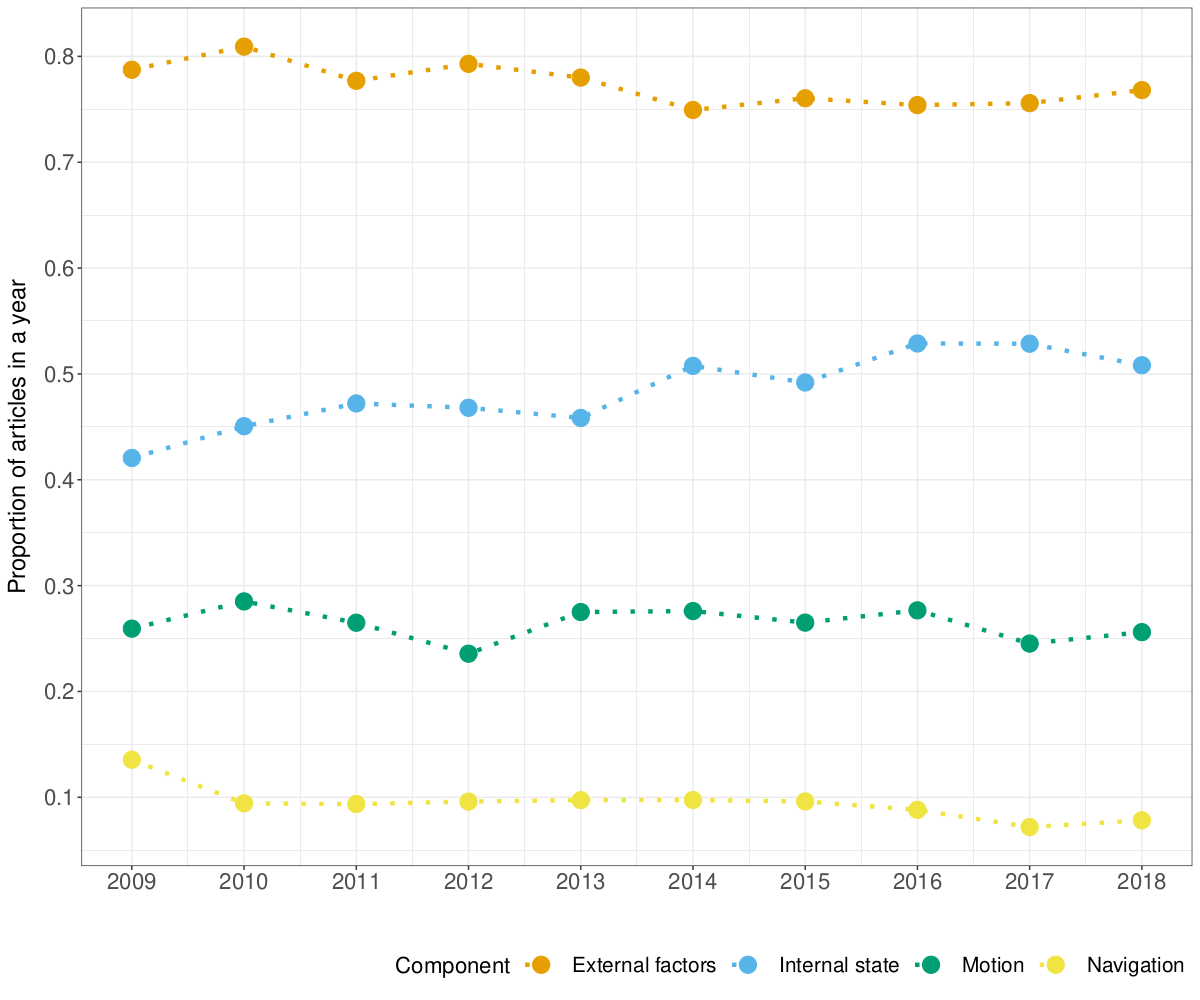

3.3 Movement ecology framework (MEF)

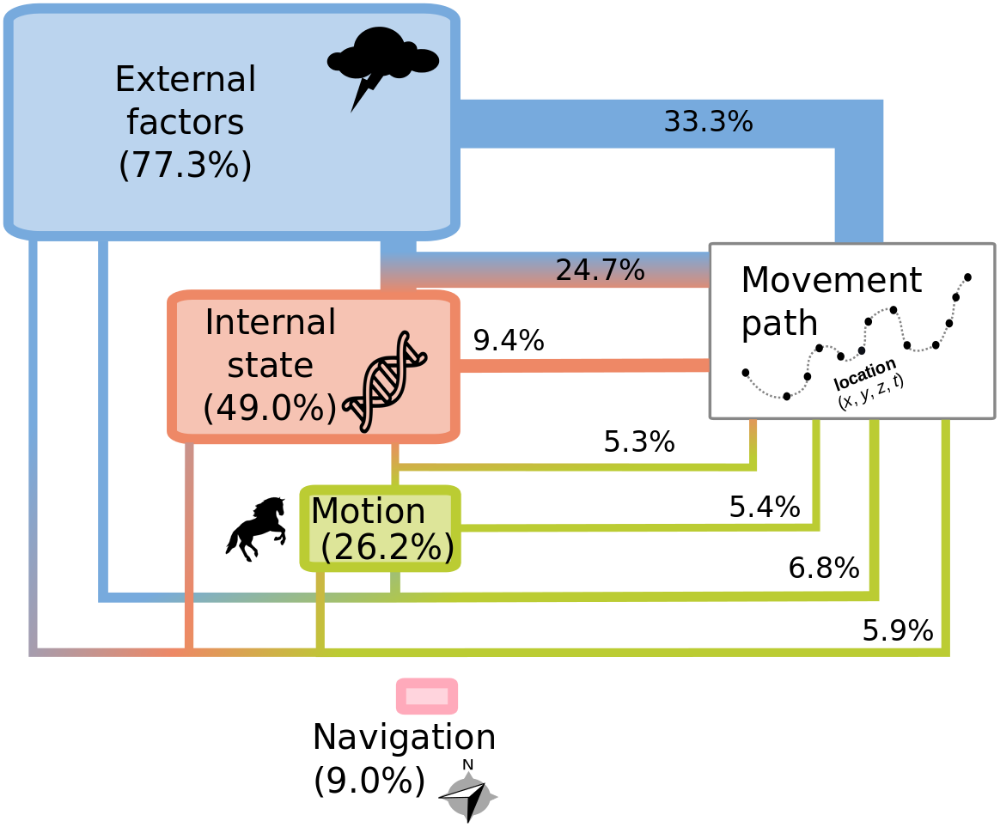

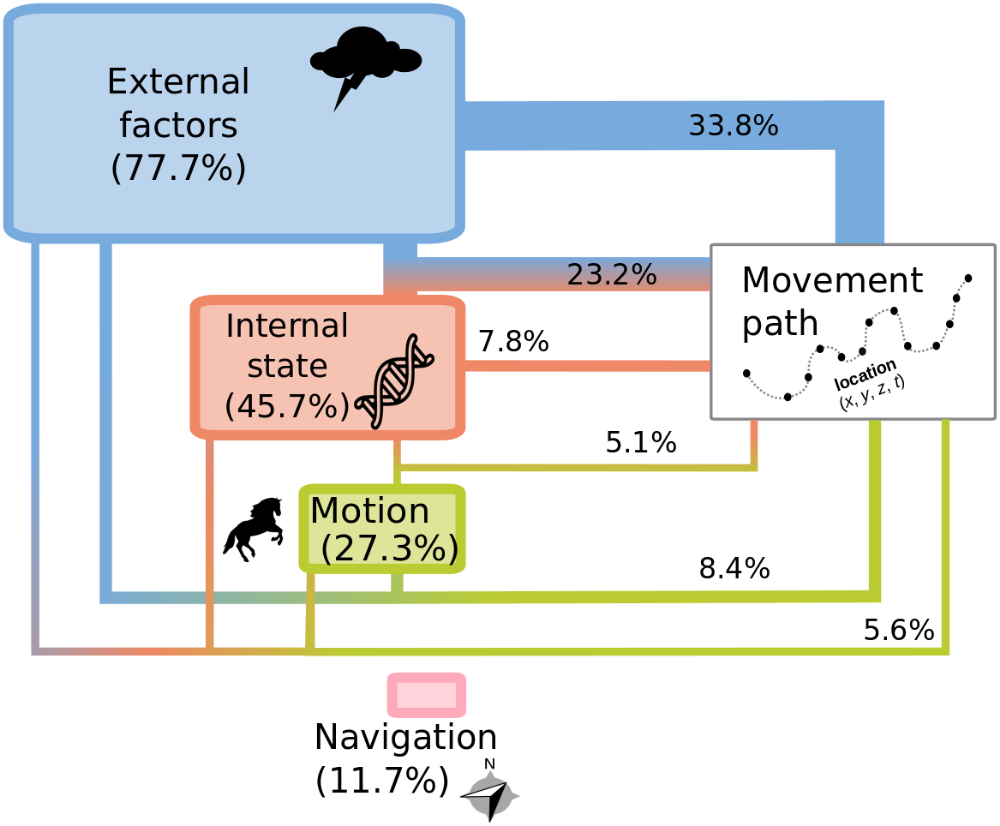

A unifying conceptual framework for movement ecology was proposed in Nathan et al. (2008). It consisted of four components: external factors (i.e. the set of environmental factors that affect movement), internal state (i.e. the inner state affecting motivation and readiness to move), navigation capacity (i.e. the set of traits enabling the individual to orient), and motion capacity (i.e. the set of traits enabling the individual to execute movement). The outcome of the interactions between these four components would be the observed movement path (plus observation errors).

To identify which components of the movement ecology framework were studied, we built what we call here a “dictionary.” A dictionary is composed of concepts and their associated words. Here, the concepts of interest were the components of the framework (i.e. internal state, external factors, motion and navigation), and their associated words were the terms potentially used in the abstracts to refer to the study of each component. For example, terms like “memory,” “sensory information,” “path integration” or “orientation” were used to identify the study of navigation. The framework dictionary is downloadable here.
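A dictionary lookup of this kind can be sketched in Python as one whole-word, case-insensitive pattern per concept. Only the four navigation terms come from the text above; the other terms, the matching rules and all names are hypothetical placeholders for the downloadable dictionary. Note that an abstract can be assigned several components.

```python
import re

# Hypothetical excerpt of a framework dictionary (only the navigation terms are from the text).
MEF_DICTIONARY = {
    "navigation": ["memory", "sensory information", "path integration", "orientation"],
    "motion": ["locomotion", "flight speed", "gait"],
    "internal state": ["hunger", "body condition", "motivation"],
    "external factors": ["temperature", "habitat", "wind"],
}


def compile_dictionary(dictionary):
    """One case-insensitive, whole-word/phrase pattern per concept."""
    return {
        concept: re.compile(r"\b(?:" + "|".join(map(re.escape, terms)) + r")\b", re.IGNORECASE)
        for concept, terms in dictionary.items()
    }


PATTERNS = compile_dictionary(MEF_DICTIONARY)


def components_studied(abstract):
    """Set of framework components whose terms appear in the abstract."""
    return {concept for concept, pattern in PATTERNS.items() if pattern.search(abstract)}


if __name__ == "__main__":
    print(components_studied("Wind and habitat shaped orientation during flight"))
```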

To assess how well the dictionary identified the components in the papers, we established a quality control procedure. For each aspect, a random sample of 100 papers was selected, and a randomly chosen coauthor who had not led the construction of the dictionary checked whether the dictionary categories were correctly identified in those papers (i.e. accuracy). The accuracy was 91%.

We replicated this analysis for the 1999-2008 period for comparison purposes.

3.3.1 Outputs

| Component | 2009-2018 | 1999-2008 |
|---|---|---|
| External factors | 77.3% | 76.7% |
| Internal state | 49.0% | 45.7% |
| Motion capacity | 26.2% | 27.3% |
| Navigation capacity | 9.0% | 11.7% |

Table 3.4. Framework components. The values are the percentages of abstracts (among those where information on the framework was gathered) that use terms related to each component. Percentages do not sum to 100% because an abstract can mention several components.

| External | Internal | Motion | Navigation | 2009-2018 Count | 2009-2018 Percentage | 1999-2008 Count | 1999-2008 Percentage |
|---|---|---|---|---|---|---|---|
| X | - | - | - | 2371 | 33.3 | 418 | 33.8 |
| X | X | - | - | 1768 | 24.8 | 287 | 23.2 |
| - | X | - | - | 663 | 9.3 | 96 | 7.8 |
| X | - | X | - | 485 | 6.8 | 104 | 8.4 |
| X | X | X | - | 424 | 6.0 | 69 | 5.6 |
| - | - | X | - | 383 | 5.4 | 56 | 4.5 |
| - | X | X | - | 373 | 5.2 | 63 | 5.1 |
| X | - | - | X | 176 | 2.5 | 44 | 3.6 |
| X | X | - | X | 136 | 1.9 | 15 | 1.2 |
| - | - | - | X | 83 | 1.2 | 23 | 1.9 |
| X | - | X | X | 73 | 1.0 | 12 | 1.0 |
| - | X | - | X | 50 | 0.7 | 16 | 1.3 |
| - | - | X | X | 49 | 0.7 | 15 | 1.2 |
| X | X | X | X | 45 | 0.6 | 11 | 0.9 |
| - | X | X | X | 41 | 0.6 | 8 | 0.6 |

Table 3.5. Number and percentage of articles in movement ecology of animals and human mobility studying each combination of components of the Movement Ecology Framework for the decades 2009-2018 and 1999-2008. In each row, an X in the column indicates the studied component. Percentages are relative to the papers in which at least one component was identified.
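The combination counts of Table 3.5 can be obtained by tallying, for each paper, the set of components detected. The Python sketch below (function name hypothetical) does this and skips papers with no component detected; this choice is consistent with the table, whose percentages match each count divided by the sum of the listed counts (7120 for 2009-2018 and 1237 for 1999-2008).

```python
from collections import Counter

COMPONENTS = ("External", "Internal", "Motion", "Navigation")


def combination_table(papers):
    """Count each combination of framework components across papers.

    papers: iterable of sets of component names (one set per paper).
    Papers with no component are skipped; percentages use the remaining
    papers as the denominator. Rows are ordered by decreasing count.
    """
    combos = Counter(frozenset(p) for p in papers if p)
    n = sum(combos.values())
    rows = []
    for combo, count in combos.most_common():
        marks = ["X" if c in combo else "-" for c in COMPONENTS]
        rows.append((*marks, count, round(100 * count / n, 1)))
    return rows


if __name__ == "__main__":
    papers = [{"External"}, {"External"}, {"External", "Internal"}, set(), {"Navigation"}]
    for row in combination_table(papers):
        print(row)
```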

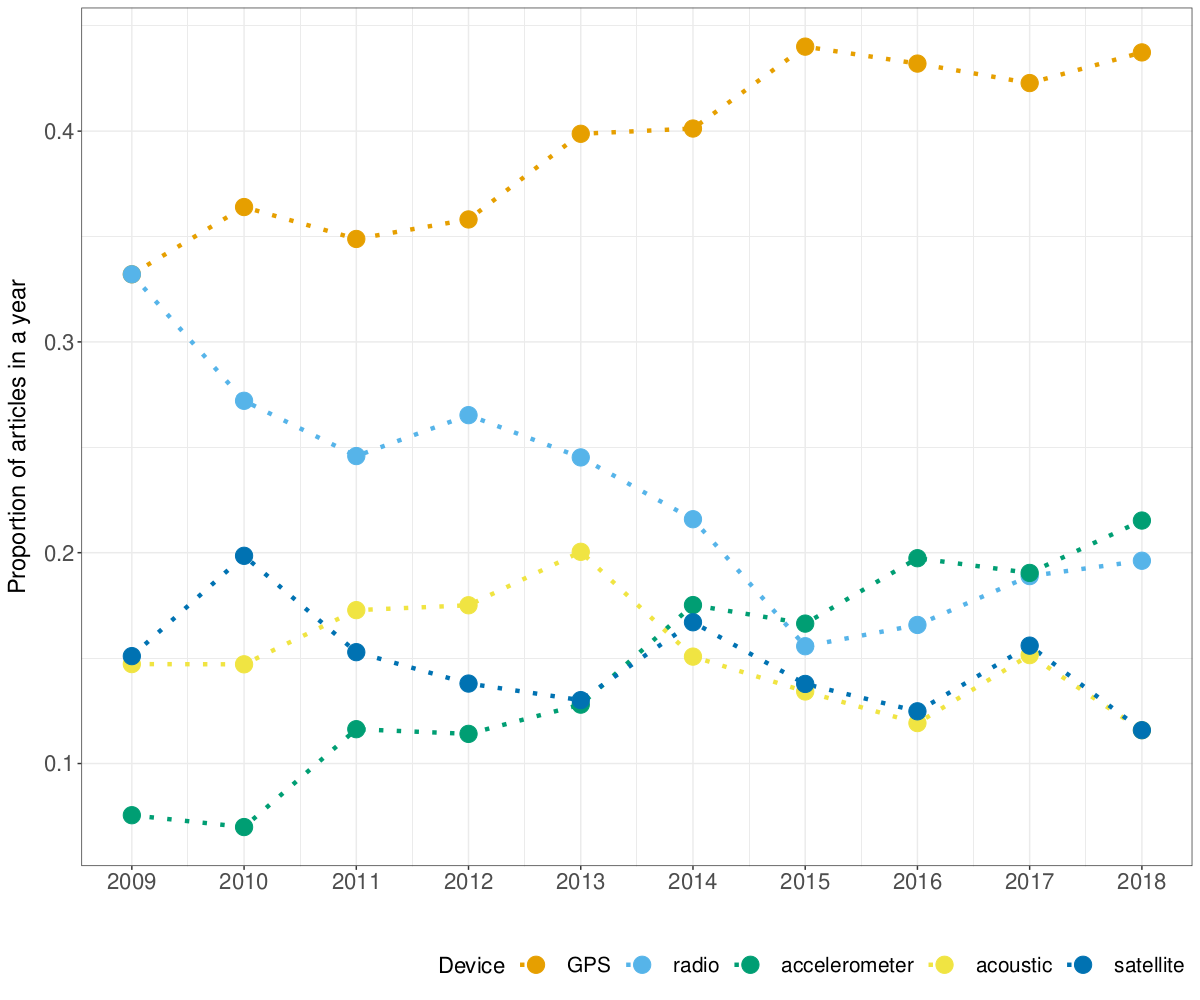

3.4 Tracking devices

We grouped tracking devices into 12 categories. These categories were meant to be as “monophyletic” as possible (i.e. each grouping closely related technologies), so broad categories had to be defined. The categories are not mutually exclusive, as the use of one technology does not rule out the use of another: e.g. radio telemetry combined with GPS is frequently used, and marine studies frequently deploy tags carrying an array of sensors that may combine multiple technologies. These categories are:

- Light loggers: Any technology that records light levels and derives locations based on the timing of twilight events.

- Satellite: Any tag that collects location via, and sends data to, satellites, so that data can be accessed remotely; frequently the ARGOS system.

- Radio telemetry: Any technology that infers location based on radio telemetry (VHF/UHF frequency). Sometimes it is used in addition to either light logger or GPS technology, though this distinction cannot be readily inferred using our methodology.

- Camera: Any device that records location/presence via photos or video, mainly camera traps at known locations, where capturing the individual on camera implies its location.

- Video: Any device that records movement via video.

- Acoustic: Any technology that uses sound to infer location, either in a similar way to radio telemetry or in an acoustic array where animal vocalizations are recorded and the locations of the sensors in the array are used to obtain an animal location.

- Pressure: Any technology that records pressure readings (frequently in the water), from which changes in pressure are used to infer vertical movement, such as through a water column.

- Accelerometer: Any technology that is placed on a subject and measures the acceleration of the tag, from which movement is inferred.

- Body conditions: Any technology that uses body condition sensors to collect data on the subject that may be associated with a movement or lack thereof, such as temperature and heart rate.

- GPS: Any technology that uses Global Positioning System satellites to calculate the location of an object. These can be handheld GPS devices or animal-borne tags.

- Radar: Any technology that uses “radio detection and ranging” devices to track objects. Can be large weather arrays or tracking radars.

- Encounter: Any analog tracking method where the user must capture the subject and place a marker on it; movement is then inferred from recaptures or resightings of the subject. This category was difficult to detect in our study because there are few specific phrases that can be used to identify it.

Their use was assessed with a dictionary approach. The dictionary can be downloaded here.

To assess how well the dictionary identified the types of devices in the papers, we established a quality control procedure. For each aspect, a random sample of 100 papers was selected, and a randomly chosen coauthor who had not led the construction of the dictionary checked whether the dictionary categories were correctly identified in those papers (i.e. accuracy). The accuracy was 84%.

To check whether we were identifying animal-borne rather than handheld GPS, we took a sample of 100 papers associated with GPS by our dictionary. Of these, 83 referred to GPS tags, 12 to handheld GPS units, and 5 to neither (e.g. GPS coordinates in general, or general practitioners).

3.4.1 Output

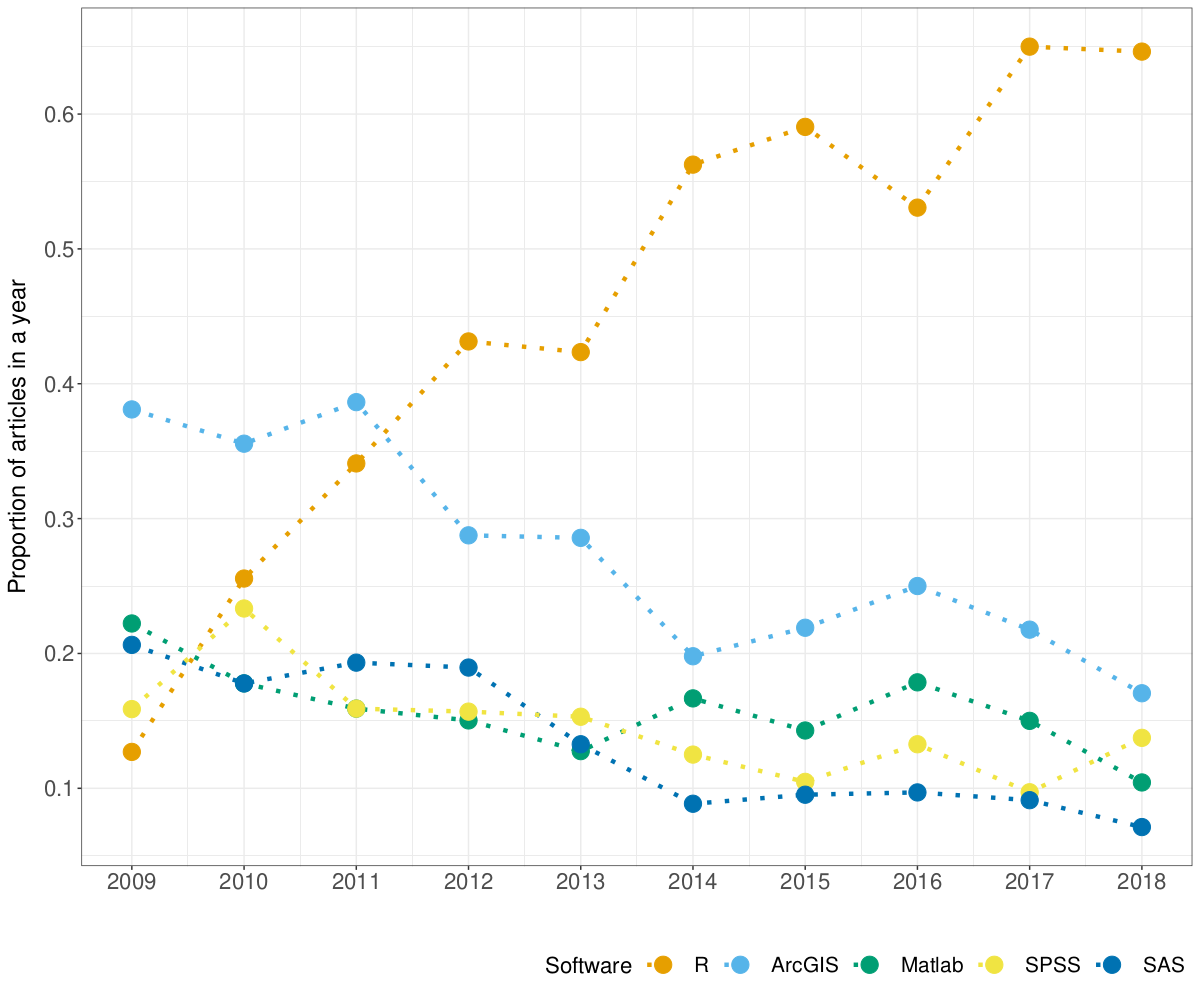

3.5 Software

Here we also used a dictionary approach, relying on expert opinion to compile all known software used in movement ecology. The 33 software tools in our list were 1) R, 2) Python, 3) SPSS, 4) Matlab, 5) SAS, 6) MARK (the program MARK, not the R package unmarked), 7) Java, 8) C (if researchers wrote C code themselves, e.g. for pre-processing data from some tracking radars), 9) Fortran, 10) WinBUGS, 11) Agent-Analyst, 12) BASTrack, 13) QGIS, 14) GRASS, 15) Microsoft Excel, 16) Noldus observer, 17) fragstats, 18) postgis (we separated PostGIS from the database category because of its extensive spatial analysis capabilities), 19) databases (any relational database, likely used for data management and for summarizing data needed for analysis), 20) e-surge, 21) m-surge, 22) u-care, 23) Genstat, 24) Biotas, 25) Statview, 26) Primer-e, 27) PAST, 28) STATA, 29) Statistica, 30) UCINET, 31) Mathcad, 32) Vicon, and 33) GME (geospatial modeling environment). The dictionary with the terms used can be downloaded here.

For quality control, we examined a random sample of 50 papers. The accuracy was 88%.

3.5.1 Output

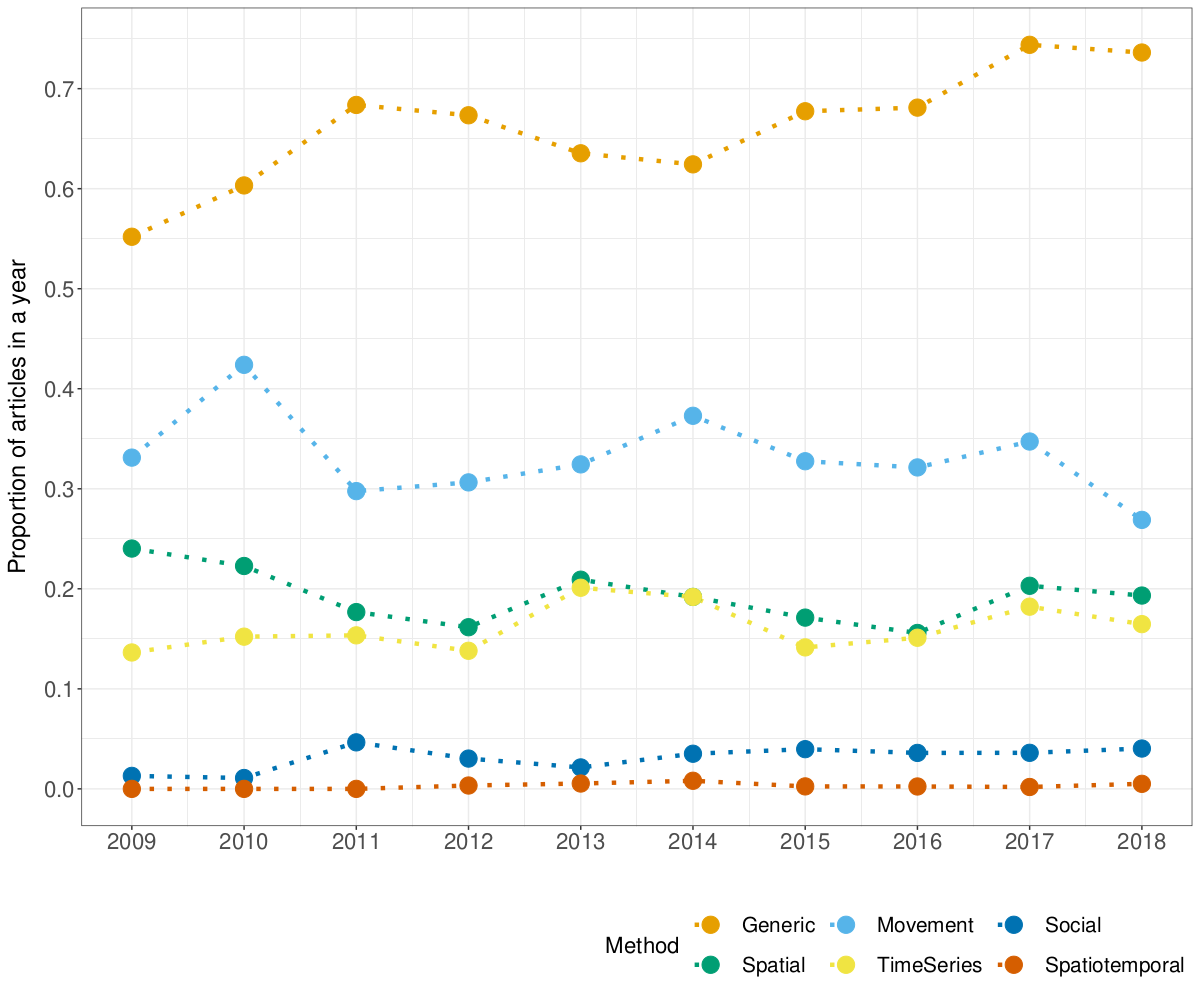

3.6 Statistical methods

Within our dictionary approach, we first used expert opinion to compile all known statistical methods (and terms used for them) that could have been used in movement ecology, resulting in 188 terms; click here to download the corresponding file. We classified statistical methods into Spatial, Time-series, Movement, Spatiotemporal, Social, and Generic. Their definitions are below:

- Spatial: spatial statistical methods (e.g. geostatistics)

- Time-series: time series methods (e.g. functional data analysis)

- Movement: statistical methods used for the study of movement (e.g. behavioral change point analysis)

- Spatiotemporal: spatiotemporal but not movement methods (e.g. spatiotemporal geostatistics)

- Social: statistical methods that are not exclusively for movement, but that characterize or model social processes (e.g. social networks)

- Generic: generic statistical methods that could be used in any type of study, that are not inherently spatial, temporal or social (e.g. a regression analysis)

Terms related to hypothesis tests were initially considered but ultimately removed: because papers commonly present p-values, which may bias researchers towards the use of hypothesis tests, counting these terms would have biased our results towards generic methods.

For quality control, we examined a random sample of 50 papers. The accuracy was 84%.

3.6.1 Outputs

| Generic | Movement | Spatial | Time-series | Social | Spatiotemporal |
|---|---|---|---|---|---|
| 67.8% | 32.6% | 18.9% | 16.5% | 3.3% | 0.3% |

Table 3.6. Percentage of papers using each type of statistical method. Percentages do not sum to 100% because a paper can use several types of methods. The code for this table can be downloaded here.

| Trigram | n |
|---|---|
| linear mixed models | 231 |
| linear mixed effects | 229 |
| generalized linear mixed | 202 |
| mixed effects models | 202 |
| linear mixed model | 188 |
| markov chain monte | 180 |
| chain monte carlo | 178 |
| akaike’s information criterion | 174 |
| akaike information criterion | 162 |
| minimum convex polygon | 158 |
| information criterion aic | 146 |
| monte carlo mcmc | 133 |
| correlated random walk | 129 |
| mixed effects model | 117 |
| hidden markov model | 116 |

Table 3.7. Most common statistical trigrams in M&M sections of papers (with more than 100 mentions in papers). To reconstruct this table, readers can refer to this downloadable code.
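Counting trigrams amounts to sliding a three-word window over the tokenized text. In this Python sketch the tokenizer (lowercasing, dropping punctuation, keeping possessive apostrophes so that “akaike’s” stays one token) is our assumption, and how the counts were restricted to statistical terms is not reproduced here. A single phrase such as “Markov chain Monte Carlo (MCMC)” yields several overlapping trigrams, which is why “markov chain monte”, “chain monte carlo” and “monte carlo mcmc” all appear in Table 3.7.

```python
import re
from collections import Counter


def trigrams(text):
    """Lowercased word trigrams; straight or curly apostrophes inside a word are kept."""
    tokens = re.findall(r"[a-z]+(?:['\u2019][a-z]+)?", text.lower())
    return [" ".join(tokens[i:i + 3]) for i in range(len(tokens) - 2)]


def count_trigrams(methods_sections, min_count=1):
    """(trigram, count) pairs over all texts, most frequent first, keeping count >= min_count."""
    counts = Counter(t for text in methods_sections for t in trigrams(text))
    return [(t, n) for t, n in counts.most_common() if n >= min_count]


if __name__ == "__main__":
    sections = ["We fitted a Markov chain Monte Carlo (MCMC) sampler.",
                "Markov chain Monte Carlo again"]
    print(count_trigrams(sections, min_count=2))
```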

The code to process the dictionaries of the framework, tracking devices, software, statistical methods, and taxonomy is downloadable here.